
“Money Will Solve the Problem”: Testing the Effectiveness of Conditional Incentives for Online Surveys

Reading: DeCamp, Whitney, and Matthew J. Manierre. 2016. “‘Money Will Solve the Problem’: Testing the Effectiveness of Conditional Incentives for Online Surveys.” Survey Practice 9(1): 1–9. doi:10.29115/SP-2016-0003.

Overview

- This study checks if offering money after completing an online survey gets more college students to respond and makes the group of responders more like the whole student population.

- They tested a $5 reward, a $2 reward, or no reward with 1,000 undergrads at a Midwest university.

- The $5 reward got more students to respond and made the sample closer to the real student mix (like gender and grades), but didn’t change the survey answers much.

What They Did

- Why They Did It: Getting people to fill out surveys is tough, especially online. In paper surveys, giving cash upfront works great (like a 19% boost, per a 1993 study). Online, it’s hard to send money first, so they tested giving money after the survey (called conditional rewards) to see if it helps get more responses and a better mix of people.

- The Plan:

- Who: 1,000 full-time undergrads at a big Midwest university, picked randomly.

- Groups: Split into three:

- No-Reward Group (600 students): Just asked to do the survey.

- $2 Group (250 students): Got $2 on their student ID card after finishing.

- $5 Group (150 students): Got $5 on their ID card after finishing.

- Students could choose if the money went to the bookstore (sells lots of stuff) or dining (food options on campus). Everyone has an ID card, so the reward was useful.

- Survey:

- About “college behaviors” (like drinking, cheating, or being a victim of theft).

- Sent by email with a unique link (no login needed, stops repeat answers).

- Emails went out in fall 2012, starting week three of classes. Reminders were sent on days 4, 9, and 17 to those who hadn’t responded (only 4% opted out).

- What They Checked:

- Response Rate: How many students did the survey.

- Who Responded: Compared responders to all students using university data (gender, GPA, race, etc.) to see if the sample was similar.

- Survey Answers: Checked if answers (like drug use or crime) differed between groups.

- How They Thought It’d Work:

- Used “leverage-salience theory,” which says rewards make people notice the survey and feel it’s worth doing, especially if the money’s bigger.

- Guessed: $5 would get more responses than $2 or nothing, the responders might look different (like more men or women), and answers might vary.
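The three-group split described above can be sketched in a few lines. This is only an illustration: the notes say the sample was picked randomly but don't spell out how students were put into groups, so random assignment is an assumption here. The function name `assign_groups`, the group labels, and the ID range 1 to 1000 are all made up for the example.

```python
import random

def assign_groups(student_ids, sizes, seed=0):
    """Randomly split student_ids into named groups of the given sizes."""
    student_ids = list(student_ids)
    if sum(sizes.values()) != len(student_ids):
        raise ValueError("group sizes must add up to the sample size")
    rng = random.Random(seed)  # fixed seed so the split is reproducible
    rng.shuffle(student_ids)
    groups, start = {}, 0
    for name, size in sizes.items():
        groups[name] = student_ids[start:start + size]
        start += size
    return groups

# Hypothetical IDs 1..1000 standing in for the sampled students.
sizes = {"no_reward": 600, "two_dollars": 250, "five_dollars": 150}
groups = assign_groups(range(1, 1001), sizes)
print({name: len(members) for name, members in groups.items()})
```

Shuffling once and slicing keeps the groups non-overlapping and exactly the sizes listed above.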

What Happened

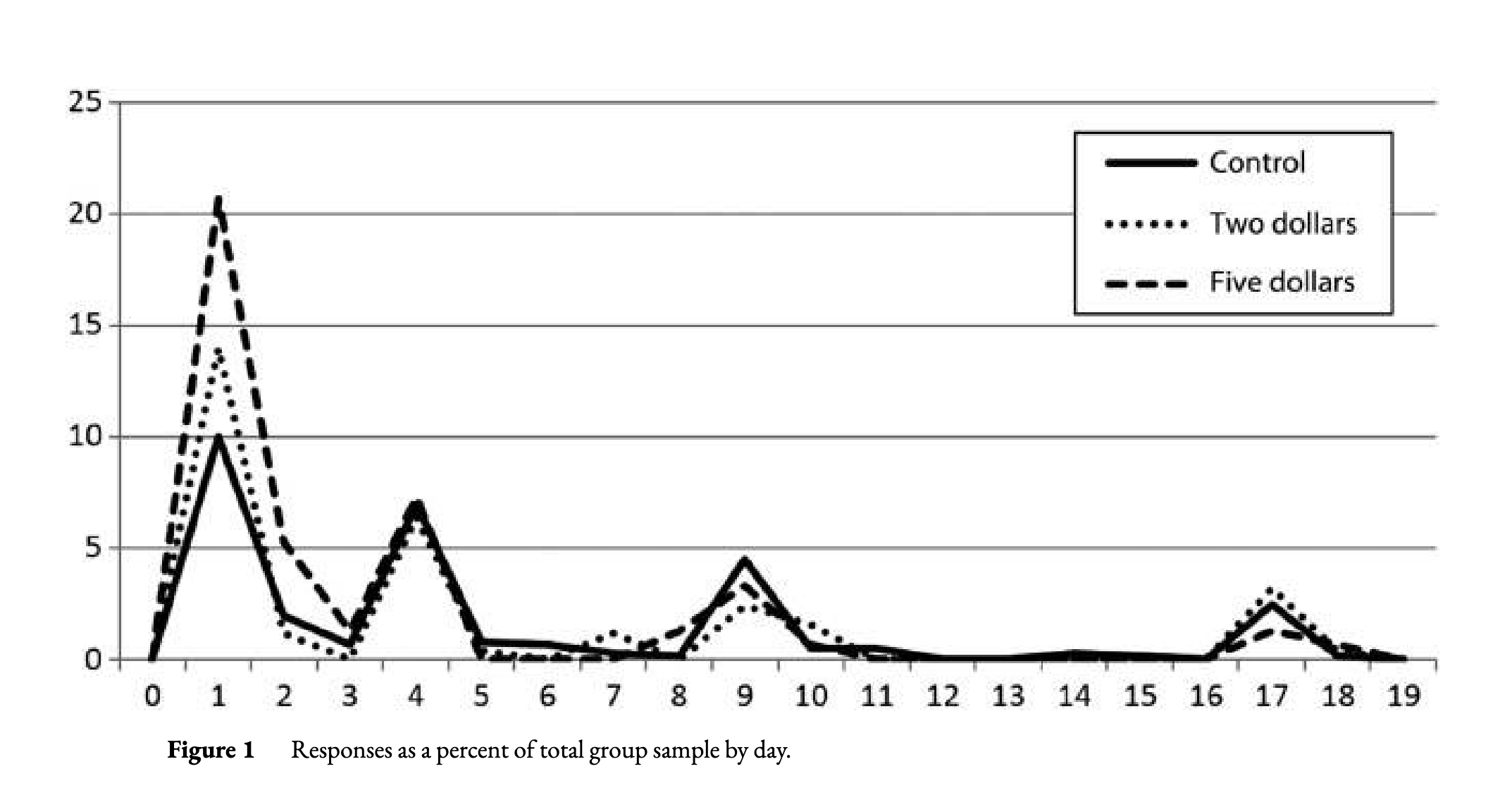

- How Many Responded:

- Overall, 322 students (32%) did the survey.

- No Reward: 30% (182/600) responded.

- $2 Reward: 31% (77/250) responded.

- $5 Reward: 42% (63/150) responded.

- The $5 reward got people to act fast.
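To see how big these gaps are, here is a rough sketch that recomputes the response rates from the counts above and runs a pooled two-proportion z-test against the no-reward group. The z-test is a common textbook check swapped in for illustration, not necessarily the test the authors used, and the names `responses` and `two_proportion_z` are made up for the example.

```python
import math

# Completed surveys and group sizes as reported in these notes.
responses = {
    "no_reward": (182, 600),
    "two_dollars": (77, 250),
    "five_dollars": (63, 150),
}
rates = {group: done / n for group, (done, n) in responses.items()}

def two_proportion_z(x1, n1, x2, n2):
    """Pooled two-proportion z-test; returns (z, two-sided p-value)."""
    p1, p2 = x1 / n1, x2 / n2
    pooled = (x1 + x2) / (n1 + n2)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n1 + 1 / n2))
    z = (p1 - p2) / se
    p_value = math.erfc(abs(z) / math.sqrt(2))  # two-sided normal tail area
    return z, p_value

base_done, base_n = responses["no_reward"]
for group in ("two_dollars", "five_dollars"):
    done, n = responses[group]
    z, p = two_proportion_z(done, n, base_done, base_n)
    abs_lift = rates[group] - rates["no_reward"]   # in proportion units
    rel_lift = abs_lift / rates["no_reward"]       # relative to baseline
    print(f"{group}: rate={rates[group]:.3f} abs_lift={abs_lift:.3f} "
          f"rel_lift={rel_lift:.2f} z={z:.2f} p={p:.4f}")
```

The absolute lift (percentage points) and the relative lift (percent of the baseline rate) are printed separately because they are easy to mix up when summarizing results like these.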

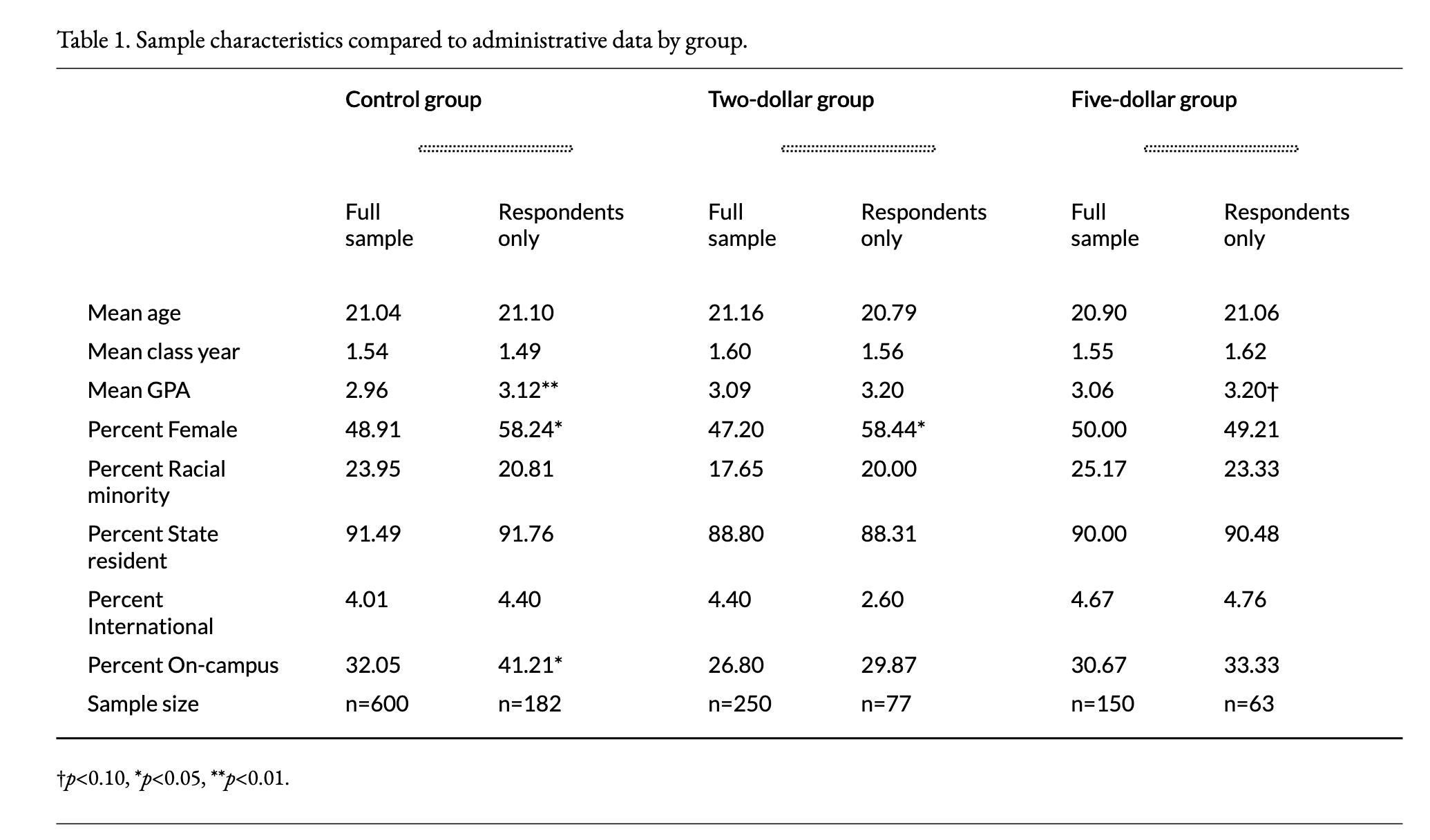

- Who Responded (Compared to University Data):

- No-Reward Group: Had too many women (58% vs. 49%), higher GPAs (3.12 vs. 2.96), and more on-campus students (41% vs. 32%) than the real student mix.

- $2 Group: Also had too many women (58% vs. 47%), but GPAs and campus living were closer to normal.

- $5 Group: Almost matched the real mix—right gender balance (49% women vs. 50%), GPA close (3.20 vs. 3.06), and on-campus living (33% vs. 31%).

- Takeaway: $5 made the responders look more like all students, especially for gender and living situation.
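The comparison above boils down to subtracting the benchmark figure from the responder figure for each trait. A minimal sketch using only the numbers listed in these notes (the $2 group's GPA and on-campus figures aren't given here, so they are left out); the dictionary layout and key names are made up for the example.

```python
# (responders, comparison figure) pairs as listed in these notes.
# Percentages are in points; GPA is a grade point average.
reported = {
    "no_reward": {"women_pct": (58, 49), "gpa": (3.12, 2.96), "on_campus_pct": (41, 32)},
    "two_dollars": {"women_pct": (58, 47)},
    "five_dollars": {"women_pct": (49, 50), "gpa": (3.20, 3.06), "on_campus_pct": (33, 31)},
}

# Positive gap = that trait is overrepresented among responders.
gaps = {
    group: {trait: round(resp - ref, 2) for trait, (resp, ref) in traits.items()}
    for group, traits in reported.items()
}
for group, traits in gaps.items():
    print(group, traits)
```

On these figures the gender and on-campus gaps shrink the most in the $5 group, while the GPA gap changes only a little.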

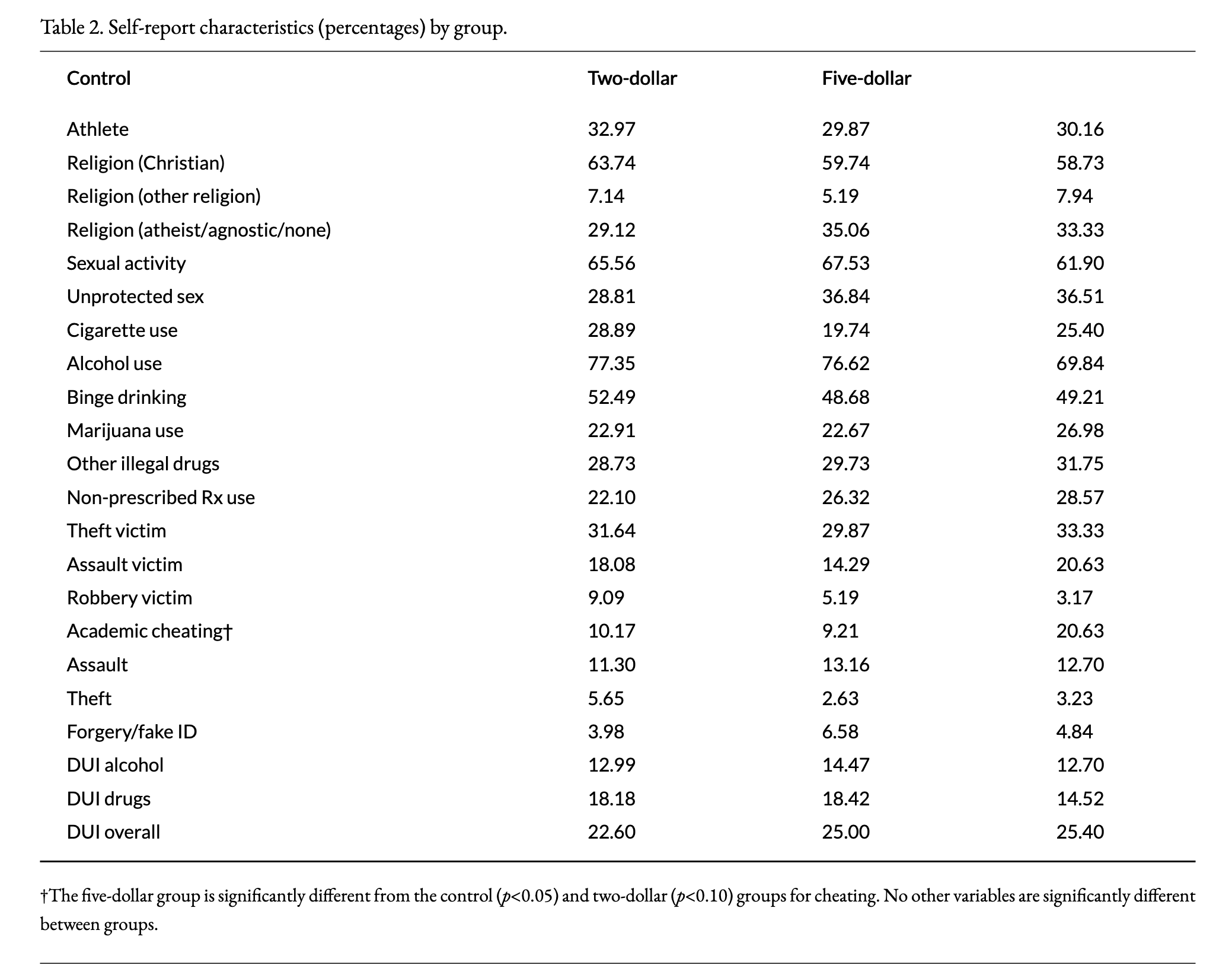

- Survey Answers:

- Asked about stuff like drinking (e.g., 70–77% used alcohol), drug use (e.g., 22–27% used marijuana), cheating, or being a victim.

- Almost no differences between groups. Only one thing stood out: 21% of the $5 group said they cheated on exams vs. 10% for others (p < 0.10). But with 22 questions, one difference might just be random chance.

- When they averaged differences across all questions (like a “deviation score”), groups were super close (less than 1% apart). So, rewards didn’t really change what people said.
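The "might just be random chance" point can be made concrete with a quick back-of-the-envelope calculation. This sketch assumes the 22 comparisons are independent and that there are no real differences at all, which is a simplification (survey items are usually correlated), so treat the numbers as rough.

```python
n_tests = 22   # survey questions compared, per the notes
alpha = 0.10   # threshold behind the "p < 0.10" result

# Expected number of "significant" results if nothing real is going on.
expected_false_positives = n_tests * alpha

# Chance of seeing at least one such result across all tests.
p_at_least_one = 1 - (1 - alpha) ** n_tests

print(f"expected chance hits: {expected_false_positives:.1f}")
print(f"P(at least one chance hit): {p_at_least_one:.2f}")
```

Under these assumptions you'd expect about two chance hits and have roughly a 90% chance of at least one, so a single difference at p < 0.10 is weak evidence.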

What It Means

- Big Points:

- A $5 reward after the survey raised the response rate by 12 percentage points (42% vs. 30%) and made the group more like the real student population (better gender balance, closer GPAs).

- A $2 reward didn’t do much—response rate was almost the same as no reward.

- Survey answers stayed the same, so the extra responses didn’t change the results.

- Why $5 Worked:

- Bigger money caught more attention (like leverage-salience theory says).

- Letting students pick bookstore or dining credit made the reward feel useful.

- $5 might’ve pulled in students who usually skip surveys (like men or off-campus folks).

- Why $2 Didn’t:

- Too small to care about, especially for busy students.

- Didn’t stand out enough to make people act.

- Tips for Online Surveys:

- Offer $5 (or more) after completion to get more responses and a better mix of people.

- Skip $2 rewards—they’re not worth it.

- Let people choose how to use the reward (like bookstore vs. food) to make it appealing.

- Send reminders, but focus on early responses (most come in week one).

- Downsides:

- Only tested college students, so might not work for others (like older adults).

- Used ID card credits, not cash, which might not work everywhere.

- Small groups (especially $5 group with 150 students) might miss small differences.

- Didn’t compare to other rewards (like lotteries or prepaid cash).